Like so many of my peers in the SEO community, I read an article on Technical SEO on SearchEngineLand and was immediately disappointed that it even made it through the quality control of their editorial team. The article was full of nonsense and bad advice for non-SEO experts. Luckily, many SEOs expressed their anger and frustration with the article, and SELand decided to ask one of them to write a rebuttal. Go read it here, as it has a lot of good stuff in it.

For 3 weeks, I’ve been meaning to write my own, explaining my view on what Technical SEO is and how you can use it. For 2.5 weeks, I let it go, as I thought it would take a lot of time to write (the topic is that extensive) and there would not be a lot to gain from it.

But then I thought, “No, let’s write it, as it can help me get back into writing and inspire others to think differently about Technical SEO.” One thing I’m not trying to do with this article is change Clayburn Griffin’s opinion about Technical SEO. From the interactions I’ve seen on Twitter, it’s clear to me he has little room to open his imagination and see what others are trying to tell him.

One of the reasons Clayburn might have this opinion of Technical SEO is that he’s on the agency side, where he most probably has little to no impact on the technical side of the websites he’s working on, let alone setting a strategy for the product or designing the product itself. As an in-house SEO, I’ve been able to influence the product roadmap to incorporate SEO best practices, or even get a full product & engineering team working with me on dedicated SEO infrastructure.

But first, am I qualified to talk about Technical SEO? I think I am, as my credentials are:

- Former Director Global SEO at eBay

- Former head of Global SEO at Airbnb

- Current Vice President Growth & SEO at Fanatics

In these three roles, I’ve worked with a lot of sites, different code bases, and different product processes and site content. All three companies have different SEO challenges, and all three have huge SEO opportunities. At all three companies I’ve been able to help steer the internal conversation toward adopting Technical SEO as well as high-quality content campaigns. At Airbnb, the SEO team had direct access to the production code on GitHub, rolling changes directly into the production environment. Right now at Fanatics, we are working on dedicated new features within the platform that powers ~340 different sports merchandise webstores. At eBay, we worked on a complex SEO SaaS technology, with dedicated engineers, product managers, and a data science team.

This blog post will focus on the eBay SEO SaaS system, as it’s highly technical, delivered a lot of upside for eBay, and I left ~5 years ago, which makes it possible for me to speak about the system, especially as it’s no longer integrated into the site (though components might still be in use).

SEO Framework: LUMPSS

For years I’ve used a framework to describe the SEO projects across the organizations I’ve worked at. It has served me well, since it breaks SEO projects down into bite-size components and outlines why a given project is important for SEO.

I’ve described the LUMPSS framework in more detail here; it is also one of the building blocks of another framework I’m currently building out, The Content-Brand Pyramid.

For the sake of this blog post, the focus is primarily on the Links in the LUMPSS framework. We, as SEOs, all know the power of getting more links pointed at your website. For years, many SEOs have focused on ways to get more links to dominate the SERPs. The LUMPSS framework not only treats links from other websites (external links) as important, but also emphasizes internal links. Internal links are under full control of the website owner/product team, and can be used to strengthen the site hierarchy, the topical relevance of pages/sections on the site, and PageRank flow for SEO.
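To make “PageRank flow” a bit more concrete, here is a toy sketch of the classic PageRank power iteration over a tiny internal link graph. It assumes the textbook formula with a damping factor of 0.85, and the page names are hypothetical; search engines today use far more signals than this, so treat it only as an illustration of how pointing more internal links at a page raises the share of rank it receives.

```python
# Toy PageRank power iteration over a hypothetical internal link graph.
# Assumption: textbook PageRank with damping 0.85; not how any search engine
# ranks pages today, only an illustration of "PageRank flow".


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets the "random jump" share first.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: spread its rank evenly over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical site: homepage -> two category pages -> one item page.
base = {
    "home": ["cat-a", "cat-b"],
    "cat-a": ["home", "item-1"],
    "cat-b": ["home"],
    "item-1": ["cat-a"],
}
# Same site, with two extra internal links pointing at item-1.
boosted = {**base, "home": ["cat-a", "cat-b", "item-1"], "cat-b": ["home", "item-1"]}

before = pagerank(base)
after = pagerank(boosted)
print(f"item-1 before: {before['item-1']:.3f}, after: {after['item-1']:.3f}")
```

In this toy graph, the two extra internal links lift item-1’s score from roughly 0.18 to roughly 0.30, at the expense of the pages that previously held that rank.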

Through the optimization of internal links, you can give a push to the pages you would like to rank higher in search engines. Which brings me to…

Theory: Second Page Poaching for Massive Traffic Gain

I first read about second page poaching on the Google Cache blog, where it was introduced by Russ Jones. The original post from 2008 is still available, but it lacks images illustrating the traffic gains you could generate by focusing on this technique.

Russ describes the technique as:

the coordination of analytics (to determine high second-page rankings) with PR flow and in-site anchor-text to coax minor SERP changes from Page 2 to Page 1.

If you do this right, pushing a page from the second page of a Google search to the first can produce a huge step change in traffic. And if you can do it at scale, across many keywords/pages, it can be transformational for any business.

This was already the case in 2008, but I believe it’s even more so in a world with instant search and search suggestions. More and more, search engine users would rather refine their query than click through to page 2 of the search results. The need to be on page 1 to get any traffic is greater than ever.
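The analytics half of Russ’s definition, finding keywords where a page already ranks high on the second page, can be sketched as a simple filter. This assumes 10 organic results per page (so positions 11-20 are page 2), and the keywords, URLs, positions, and search volumes below are hypothetical. Sorting by search volume first, then by closeness to page 1, is just one reasonable way to prioritize.

```python
from dataclasses import dataclass


@dataclass
class KeywordRanking:
    keyword: str
    url: str
    position: int          # current organic position (1 = top result)
    monthly_searches: int  # estimated search volume


def second_page_candidates(rankings, first=11, last=20):
    """Return rankings on page 2 (positions 11-20 by default), biggest volume first.

    Assumes 10 organic results per page; the prioritization is illustrative.
    """
    page_two = [r for r in rankings if first <= r.position <= last]
    # Highest search volume first; ties go to the page closest to page 1.
    return sorted(page_two, key=lambda r: (-r.monthly_searches, r.position))


# Hypothetical ranking data, for illustration only.
rankings = [
    KeywordRanking("apple ipod", "/sch/apple-ipod", 12, 90_000),
    KeywordRanking("ipod nano", "/sch/ipod-nano", 4, 40_000),
    KeywordRanking("ipod touch 32gb", "/sch/ipod-touch-32gb", 18, 15_000),
    KeywordRanking("ipod classic", "/sch/ipod-classic", 27, 20_000),
]

for r in second_page_candidates(rankings):
    print(r.position, r.keyword, r.url)
```

Only “apple ipod” and “ipod touch 32gb” qualify here: one is already on page 1 and the other is too deep on page 3 to be a quick win.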

Internal Link Optimization #FTW

With a website like eBay, which is a beast in itself, there is a lot of raw PageRank to work with. Just imagine: when we started to execute on this strategy back in 2009, the domain ebay.com had been registered since 1995 and had accumulated a lot of external links over the years. I always say that I have one big blind spot in my SEO knowledge, which is link building. Working for a site like eBay, you don’t have to actively do link building, as you already get so many external links to the site naturally, at least when I worked there. The point is not to screw it up, and to work with the materials you have.

Enter internal link optimization at scale. Following the second page poaching theory, if you can use internal links to flow PageRank to the pages you would like to push onto the first page of a SERP, a site like eBay can fairly easily start ranking with a lot more pages.

So if a user’s browser requested a search result page on eBay for the keyword “Apple iPod”, the system would integrate 6 relevant links into the page.

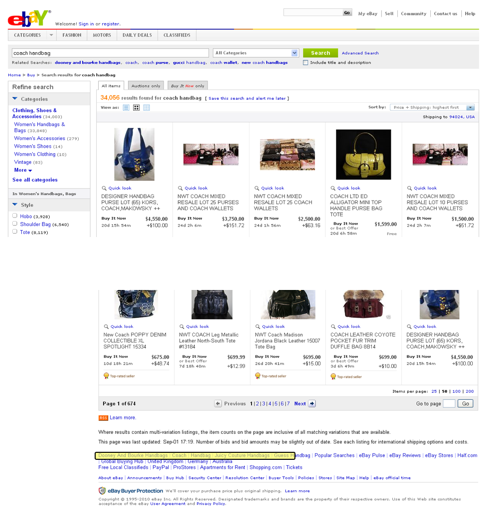

Below is the integration on an eBay page at the time:

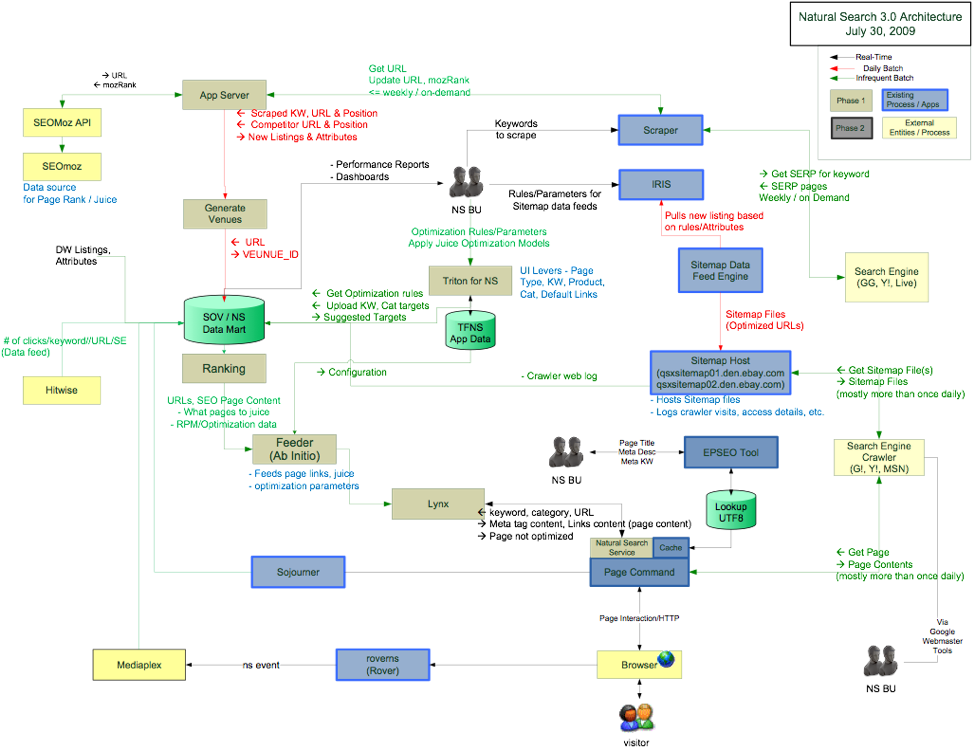

Below is the technical blueprint for the service. It worked like this:

- As an eBay user browsed through the site, the browser would request the page.

- As the page loaded, a request would be sent to our service with some information attached. This information contained:

  - Keyword or product category page

  - Page type (search, browse or item)

- Based on these inputs, the service would return a package of XML containing 6 internal links, which would be rendered on the page

- The links would be determined based on the inputs, where only links relevant to the keyword or product would qualify
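The request/response flow above can be sketched as a small function that takes the keyword and page type and returns an XML fragment with up to six relevant links. This is a hypothetical Python illustration, not eBay’s implementation (which was mostly C++ and Java): the link inventory, priority scores, XML element names, and the word-overlap relevance check are all assumptions standing in for the real relevance logic.

```python
import xml.etree.ElementTree as ET

MAX_LINKS = 6  # the post describes six links per page

# Hypothetical link inventory: (target keyword, URL, priority). The priority
# stands in for "how valuable is it to push this page onto page 1".
LINK_INVENTORY = [
    ("nikon d90", "/sch/nikon-d90", 0.95),
    ("apple ipod nano", "/sch/apple-ipod-nano", 0.90),
    ("apple ipod touch", "/sch/apple-ipod-touch", 0.80),
    ("ipod classic 160gb", "/sch/ipod-classic-160gb", 0.70),
    ("ipod shuffle", "/sch/ipod-shuffle", 0.60),
    ("apple ipod charger", "/sch/apple-ipod-charger", 0.50),
    ("ipod headphones", "/sch/ipod-headphones", 0.40),
    ("apple macbook", "/sch/apple-macbook", 0.35),
    ("ipod case", "/sch/ipod-case", 0.30),
]


def relevance(query: str, target_keyword: str) -> int:
    """Number of shared words: a deliberately crude stand-in for real relevance scoring."""
    return len(set(query.lower().split()) & set(target_keyword.lower().split()))


def build_link_module(keyword: str, page_type: str) -> str:
    """Return an XML fragment with up to MAX_LINKS relevant internal links."""
    # Page type is only validated in this sketch; it does not change the ranking.
    if page_type not in {"search", "browse", "item"}:
        raise ValueError(f"unknown page type: {page_type}")
    # Only links sharing at least one word with the keyword qualify,
    # and a page never links to its own keyword.
    candidates = [
        (priority, target, url)
        for target, url, priority in LINK_INVENTORY
        if target != keyword.lower() and relevance(keyword, target) > 0
    ]
    candidates.sort(key=lambda c: c[0], reverse=True)
    root = ET.Element("links", keyword=keyword, pageType=page_type)
    for _, target, url in candidates[:MAX_LINKS]:
        ET.SubElement(root, "link", href=url).text = target
    return ET.tostring(root, encoding="unicode")


print(build_link_module("Apple iPod", "search"))
```

For “Apple iPod”, the high-priority but irrelevant “nikon d90” link is filtered out, and the six highest-priority iPod-related links are returned.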

A number of different technologies and programming languages were used:

- The majority of the system was built in C++, as services talking to each other

- A Java service layer to integrate with the core website, as eBay is/was written in Java

- PHP for the back-end interface, where the team managing the optimization could enter new keywords

- Primarily MySQL databases, into which information was pulled from external sources and internal (eBay) sources

- Built as a platform, so new services could be integrated for new features

The scale of the system was huge; as it was integrated directly into eBay’s core pages, there was no room to fail. At launch, the link optimization platform was only integrated on the search and browse pages, but when it moved onto the item page, the capacity had to be extended. The initial investment in data center servers was already $800K, just to plug in a couple of extra boxes, before we had even tried the system.

Some performance numbers once it was up and running:

- 2 billion asynchronous server-to-server requests a day

- Response time of 4 milliseconds, against an SLA of 15 milliseconds

- Co-located in 3 data centers
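As a back-of-the-envelope check on those numbers: 2 billion requests a day works out to roughly 23,000 requests per second on average, and by Little’s law (requests in flight = arrival rate × response time) a 4 ms response time means only about 93 requests are in flight at any moment on average. The sketch assumes traffic is spread evenly over the day and across the 3 data centers, which real traffic never is, so peaks would be considerably higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

requests_per_day = 2_000_000_000  # from the post
data_centers = 3                  # from the post
response_time_s = 4 / 1000        # 4 ms response time, from the post
sla_s = 15 / 1000                 # 15 ms SLA, from the post

# Average arrival rate, assuming traffic is spread evenly over the day.
avg_rps = requests_per_day / SECONDS_PER_DAY
# Assumes an even split across the data centers.
avg_rps_per_dc = avg_rps / data_centers
# Little's law: average requests in flight = arrival rate x time in system.
in_flight = avg_rps * response_time_s
# Latency headroom under the SLA, in milliseconds.
headroom_ms = (sla_s - response_time_s) * 1000

print(f"{avg_rps:,.0f} req/s overall, {avg_rps_per_dc:,.0f} req/s per data center")
print(f"~{in_flight:.0f} requests in flight on average, {headroom_ms:.0f} ms under the SLA")
```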

The Efficient Frontier: How Investment & Portfolio Theory Can Help with Internal Link Optimization

Now for the analytics on how to pick the right page to link to. With a large paid search budget, eBay was always a pioneer in paid search advertising. As early as 2005, eBay built an in-house platform that used analytical concepts from modern portfolio theory to optimize its budget.

The concept of the Efficient Frontier is still used to this day in paid search campaigns to determine where you should spend your next dollar to get the highest return.

Here is a short overview of the Efficient Frontier concept from Wikipedia; within the scope of this article, I will not go deep into the theory or how it can be applied to paid search.

A combination of assets, i.e. a portfolio, is referred to as “efficient” if it has the best possible expected level of return for its level of risk (which is represented by the standard deviation of the portfolio’s return). Here, every possible combination of risky assets can be plotted in risk–expected return space, and the collection of all such possible portfolios defines a region in this space. In the absence of the opportunity to hold a risk-free asset, this region is the opportunity set (the feasible set). The positively sloped (upward-sloped) top boundary of this region is a portion of a hyperbola and is called the “efficient frontier.”

If a risk-free asset is also available, the opportunity set is larger, and its upper boundary, the efficient frontier, is a straight line segment emanating from the vertical axis at the value of the risk-free asset’s return and tangent to the risky-assets-only opportunity set.
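For readers who want the math behind the quoted definition, the standard mean-variance (Markowitz) setup is summarized below. The notation is mine, not the post’s: **w** are the portfolio weights, **μ** the assets’ expected returns, Σ their covariance matrix, r_f the risk-free return, and T the tangency portfolio.

```latex
\begin{aligned}
\mu_p &= \mathbf{w}^\top \boldsymbol{\mu}, \qquad \sigma_p^2 = \mathbf{w}^\top \Sigma \, \mathbf{w} \\
\text{frontier:} \quad \sigma_p^2(\mu_p) &= \min_{\mathbf{w}} \; \mathbf{w}^\top \Sigma \, \mathbf{w}
  \quad \text{s.t.} \quad \mathbf{w}^\top \boldsymbol{\mu} = \mu_p, \;\; \mathbf{w}^\top \mathbf{1} = 1 \\
\text{with a risk-free asset:} \quad \mathbb{E}[R] &= r_f + \frac{\mathbb{E}[R_T] - r_f}{\sigma_T} \, \sigma
\end{aligned}
```

Solving the minimization for every target return μ_p traces the hyperbola from the quote; the part above the minimum-variance portfolio is the efficient frontier. The last line is the straight-line frontier once a risk-free asset is added: it starts at r_f on the vertical axis and touches the risky-only region at T.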

It’s about finding that sweet spot where your ROI on the investment is optimal for the curve you’re on. If you had another dollar to invest, where would you spend it to get the highest ROI on that dollar?

Now, just imagine replacing the money with your site’s PageRank: where would you spend that next bit of PageRank to push pages up in the SERPs and get the highest ROI on your PageRank distribution?

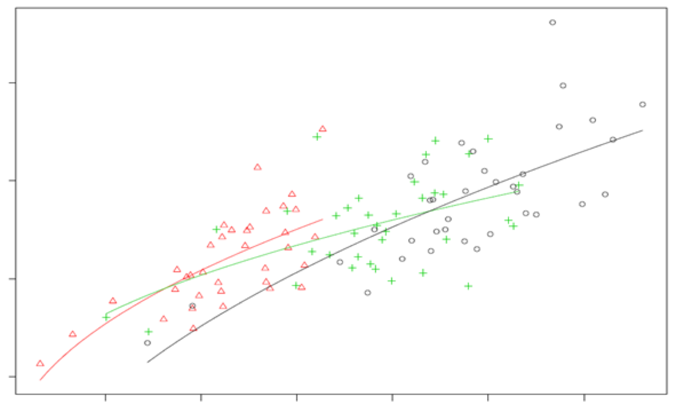
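The “where does the next dollar go” question maps naturally onto a greedy allocation: hand out internal links one at a time, each to the page whose next link is expected to add the most. The sketch below is a simplification swapped in for illustration, not eBay’s model and not a full mean-variance optimization: it ignores risk, and it assumes a hypothetical value per page plus a diminishing-returns factor (`decay`) for each extra link to the same page. When every page’s returns shrink steadily like this, always taking the largest marginal gain gives the best total.

```python
import heapq


def allocate_links(pages: dict[str, float], total_links: int,
                   decay: float = 0.5) -> dict[str, int]:
    """Greedily assign internal links, one at a time, to the highest marginal gain.

    pages maps a URL to a hypothetical value of its first extra link
    (e.g. search volume x expected click gain). Each further link to the
    same page is assumed to be worth `decay` times the previous one.
    """
    allocation = {url: 0 for url in pages}
    # heapq is a min-heap, so store negated gains to pop the largest first.
    heap = [(-value, url) for url, value in pages.items()]
    heapq.heapify(heap)
    for _ in range(total_links):
        if not heap:
            break
        neg_gain, url = heapq.heappop(heap)
        allocation[url] += 1
        # The next link to this page is worth less (diminishing returns).
        heapq.heappush(heap, (neg_gain * decay, url))
    return allocation


# Hypothetical page values, for illustration only.
pages = {"/sch/apple-ipod": 100.0, "/sch/ipod-touch-32gb": 60.0, "/sch/ipod-case": 10.0}
print(allocate_links(pages, total_links=5))
```

With these made-up values, five links split 3/2/0: the top page keeps winning links until its next link is worth less than the second page’s, and the low-value page never gets one.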

Results, Test vs Control

So, with all that built, the team I managed went after the opportunities on the second page, flowing PageRank across the different product/keyword pages. We achieved a good improvement in rankings, but nothing speaks louder than showing the money.

Below is a graph of the keyword sets we managed across the platform, where we tracked the test keywords that were getting “juiced up” against control keywords.

The estimated upside from the platform in the US alone was about $20 million. Expanding the capabilities and going global would have multiplied this number by 3-4x.

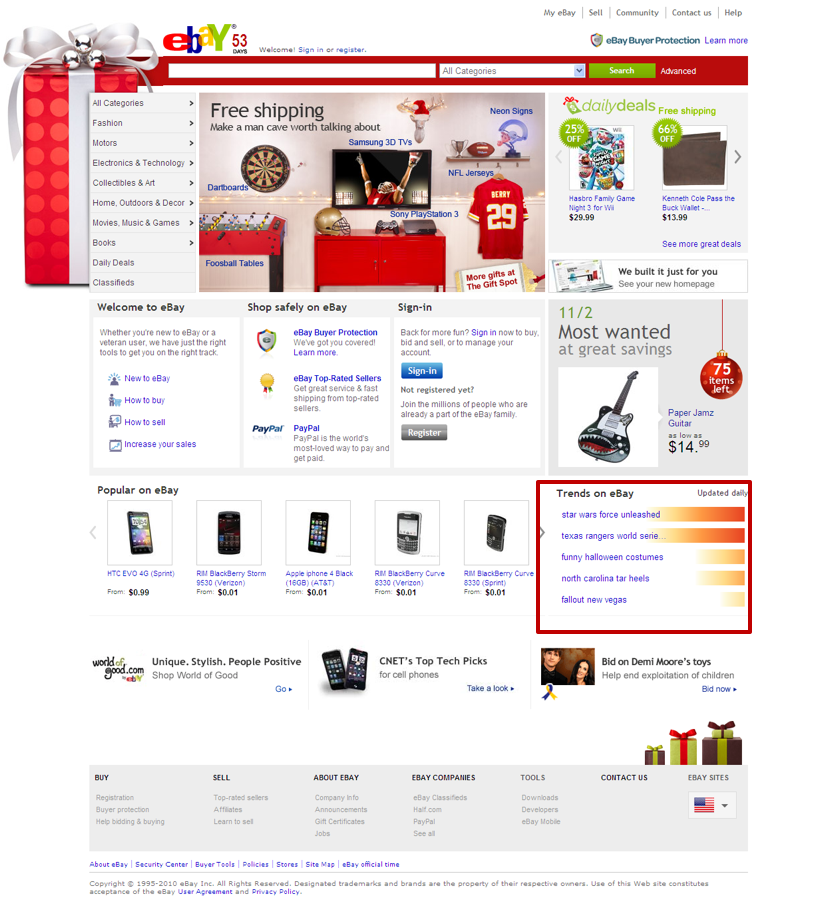
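The post doesn’t spell out how the test-vs-control lift was calculated. One common way, sketched here with hypothetical traffic numbers, is to use the control keywords’ trend to estimate what the test keywords would have done without the change, and measure the test group against that baseline. It assumes the two groups would otherwise have moved together.

```python
def relative_lift(test_before: float, test_after: float,
                  control_before: float, control_after: float) -> float:
    """Lift of the test group over what the control group's trend predicts.

    Assumes test and control would have moved together without the change.
    """
    # Scale the test group's starting traffic by the control group's growth.
    expected_test_after = test_before * (control_after / control_before)
    return test_after / expected_test_after - 1.0


# Hypothetical weekly organic visits, for illustration only.
lift = relative_lift(test_before=10_000, test_after=13_200,
                     control_before=8_000, control_after=8_800)
print(f"lift vs control: {lift:.1%}")
```

Here the control group grew 10%, so the test group’s expected traffic was 11,000 visits; the actual 13,200 is a 20% lift over that baseline.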

The internal linking was so successful at pushing certain pages into the SERPs that, for a short time, we even managed a module on the homepage. The Trends on eBay module, shown below, was powered by the SEO system, and the SEO team had full control over which page would be linked from each trending keyword. (The keywords were genuinely breaking out of their normal trend; they were not fabricated.)

It goes without saying that the pages linked from the eBay homepage would get an immediate boost in rankings, and hence traffic. This module’s potential to drive growth exceeded that of many tools I’ve worked with in the past. The eBay homepage is very powerful when it comes to link equity, and when combined with products going hot on social media or search, being able to take a piece of the action was very exciting.
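The post doesn’t describe how breakout keywords were detected for the Trends on eBay module. As a hypothetical illustration only, a simple z-score rule flags a keyword when today’s count sits several standard deviations above its recent average; the window, threshold, and sample counts below are assumptions.

```python
from statistics import mean, stdev


def is_breaking_out(daily_counts: list[float], window: int = 7,
                    threshold: float = 3.0) -> bool:
    """Flag the latest value if it sits more than `threshold` standard
    deviations above the mean of the preceding `window` values.

    A simple z-score rule, assumed for illustration; not eBay's method.
    """
    if len(daily_counts) < window + 1:
        return False  # not enough history to judge
    history = daily_counts[-(window + 1):-1]
    latest = daily_counts[-1]
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase counts as a breakout.
        return latest > mean(history)
    return (latest - mean(history)) / spread > threshold


# Hypothetical daily search counts for two keywords.
steady = [120, 118, 125, 122, 119, 121, 124, 123]
spiking = [120, 118, 125, 122, 119, 121, 124, 410]
print(is_breaking_out(steady), is_breaking_out(spiking))
```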

Conclusion

Even after working for several hours to describe the second page poaching internal linking system we built at eBay as best I can, I still think I’m missing aspects that might not be completely clear. I’ve lived this for so long that it’s easy for me to comprehend, but I might have left out some details. Please let me know in the comments if you have any questions, and I will jump in to clear anything up.

In my view, Technical SEO is more than just making the site work properly. I will always be looking for ways to enhance the product that also contribute to growth in SEO traffic. This may include highly technical projects like the one described here. This is why I believe Technical SEO is more than just “fixing” the site: it’s the way the product works.

What’s next: I have two more technical blog posts coming up. One describes how we worked on expired item pages at eBay and how best to handle them; the other focuses on a particular implementation of the Canonical URL tag on SourceForge. Stay tuned for these two.

I also recommend you read the post by Dave Snyder on Technical SEO.

A fantastic insight into the immediate ROI that can be gained from properly implemented technical SEO. This quickly measurable side of organic search marketing is where SEO can really be pitted side by side with PPC and other paid customer acquisition methods.

Thanks Dennis.

Nothing says game-changer like a spammy 2009 tactic that literally EVERY ecommerce site already uses

It’s also worth noting that eBay got hit with a manual penalty, which tends to happen when you put SEO above all else.

Not a spammy tactic. Also, here’s a reference that will help you use the word “literally” correctly next time:

http://theoatmeal.com/comics/literally

And, the manual penalty had nothing to do with internal linking.

Hi Dennis.

Did you use scraped Toolbar PageRank for this? If you were doing this today and in the absence of TBPR, would you recommend Moz’s PA score or something else?

Hi. Any ideas on this?

“Like so many of my peers in the SEO community, I read an article on Technical SEO on SearchEngineLand and was immediately disappointed this article even made it through the quality control of their editorial team.”

Speaking on behalf of the digital marketing publishers of the world, stuff makes it through from time to time, and the testament to sound publishing is how the SEL team learns and adjusts from letting something slip through the cracks (for this post or any other one out there).

I know with us, it’s made our digital editorial team much stronger.

Good points Loren – it’s hard to be on top of it all when it gets published. The key is to take in the feedback and adapt.

Hey Dennis – good article. I just wanted to add a couple of points. I have recently been giving presentations on this very subject. It includes the internal linking concept, but we also look at the content itself (for those pages ranking on 2nd page of Google) and often do some semantic keyword replacement as well as fleshing out the content.

Between those three enhancements, we have seen some incredible traffic lifts. The pages often get a bump to page 1 and they rank for many more keyword phrases.

-Arnie

Yes Arnie, those three can be very beneficial for your rankings. I only described the linking optimization in this post, but the platform had many other features we built into it.

I’m planning to do 2 follow up posts on those.

Great post Dennis!

Apart from the old post at thegooglecache.com, where can I find other explanations of how to put the Second Page Poaching Theory into practice?
